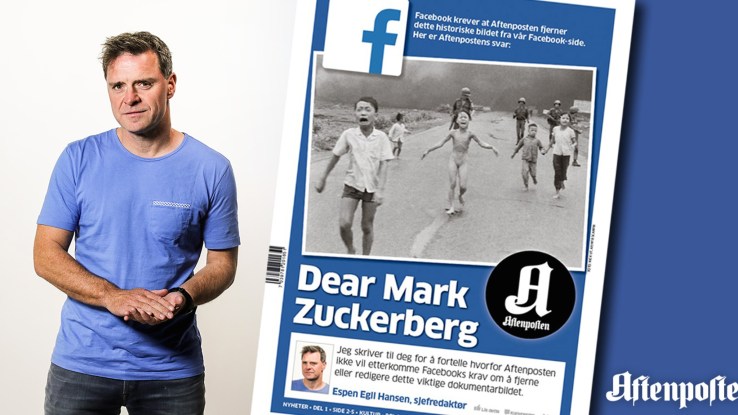

Facebook has backtracked on its decision to censor posts by a Norwegian journalist and newspaper, reinstating the nude photo of a child fleeing napalm during the Vietnam War. Facebook had originally told The Guardian, “We try to find the right balance between enabling people to express themselves while maintaining a safe and respectful experience for our global community.”

Now Facebook’s statement to TechCrunch is:

“After hearing from our community, we looked again at how our Community Standards were applied in this case. An image of a naked child would normally be presumed to violate our Community Standards, and in some countries might even qualify as child pornography. In this case, we recognize the history and global importance of this image in documenting a particular moment in time. Because of its status as an iconic image of historical importance, the value of permitting sharing outweighs the value of protecting the community by removal, so we have decided to reinstate the image on Facebook where we are aware it has been removed. We will also adjust our review mechanisms to permit sharing of the image going forward. It will take some time to adjust these systems but the photo should be available for sharing in the coming days. We are always looking to improve our policies to make sure they both promote free expression and keep our community safe, and we will be engaging with publishers and other members of our global community on these important questions going forward.”

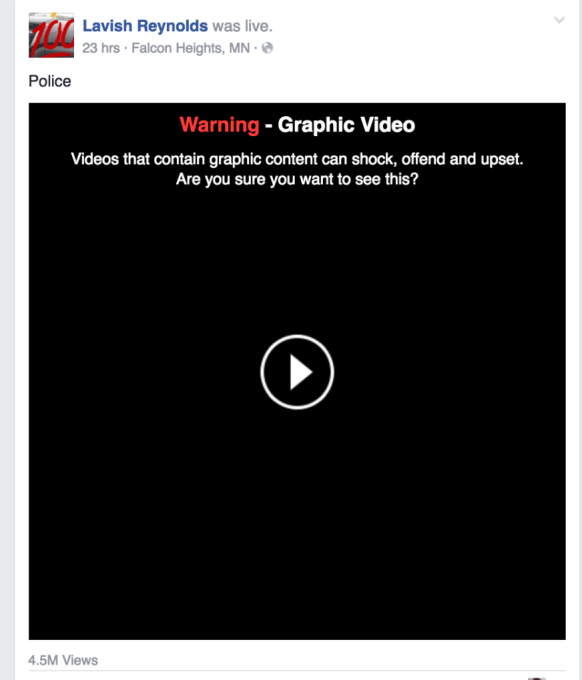

Facebook continues to find itself in problematic situations as it attempts to control what appears in the News Feed. Earlier this year it blamed a “technical glitch” when a Live video of Philando Castile dying after being shot by police disappeared from the social network for several hours before reappearing.

At the time, Mark Zuckerberg said, “The images we’ve seen this week are graphic and heartbreaking, and they shine a light on the fear that millions of members of our community live with every day.” That indicated that Facebook saw value in transmitting graphic and potentially disturbing content if it was newsworthy. The company later clarified its policy to me, explaining that it only removes graphic content if it celebrates or glorifies violence.

I’ve recommended that Facebook allow people to report content as graphic but newsworthy so the social network can obscure potentially offensive imagery with a warning that people can click through if they still want to view it. Right now, users can report content only as offensive or graphic, with no way to add that they think it should stay up. Facebook says people should simply report offensive imagery and it will make the call about what to do with it.

Covering the image with a disclaimer interstitial, instead of deleting the posts containing it, would have been a better option in the case of the napalm girl photo, at least until Facebook could decide whether to simply leave it fully visible.

These censorship blunders, combined with the decreasing visibility of news outlets in the feed, are surely pushing publishers to reconsider their reliance on Facebook. Unfortunately, most pull too much traffic from Facebook to simply ditch it. Meanwhile, many have already begun working with its Instant Articles program, which decreases load time in exchange for giving Facebook sweeping control over the visual form of articles and limiting the monetization, subscription, and recirculation options publishers typically use on their own sites.

In hindsight, publishers should have been working to deepen direct relationships with their readers rather than jumping headfirst into a channel they don’t control.
